Parallel Application Analysis in Exascale Computing

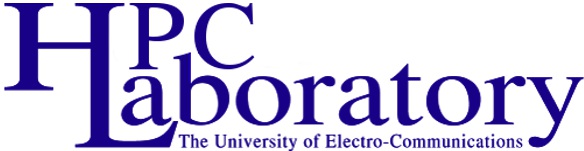

Understanding the dynamic behavior of parallel applications is difficult at extremely large scales. Profiling and tracing are commonly used for the performance analysis of parallel applications, but these approaches are unsuitable for large-scale applications because they require the target application to be executed at the target scale. We develop a novel technique for the performance analysis of large-scale applications. Our technique relies on runtime information prediction, which predicts the behavior of a target application at a large scale from its dynamic behavior observed at smaller scales. This work is supported by JSPS (Japan Society for the Promotion of Science) KAKENHI.
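The idea of predicting large-scale behavior from small-scale runs can be illustrated with a minimal sketch. It is not the prediction method used in this project: it assumes, purely for illustration, that a per-rank metric grows as `a + b * log2(p)` with the process count `p`, and the measurements are hypothetical.

```python
import math

def fit_log_model(scales, values):
    """Least-squares fit of value = a + b * log2(scale).

    The logarithmic model is an assumption made for this sketch only.
    """
    xs = [math.log2(p) for p in scales]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def predict(a, b, scale):
    """Extrapolate the fitted model to a process count that was never run."""
    return a + b * math.log2(scale)

# Hypothetical measurements: messages sent per rank at small process counts.
small_scales = [4, 8, 16, 32]
messages_per_rank = [20.0, 25.0, 30.0, 35.0]

a, b = fit_log_model(small_scales, messages_per_rank)
predicted = predict(a, b, 1024)
print(f"fitted a={a:.2f}, b={b:.2f}, predicted at 1024 ranks: {predicted:.1f}")
```

The fit uses only runs at 4 to 32 processes, yet it produces an estimate for 1024 processes, which is the property that makes prediction attractive when the target scale is too expensive to run.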

Selected Publications

- S. Miwa, I. Laguna, and M. Schulz, PredCom: A Predictive Approach to Collecting Communication Traces, IEEE Transactions on Parallel and Distributed Systems, Vol. 32, Issue 1, pp.45-58 (2021).

Power Management in High Performance Computing Systems

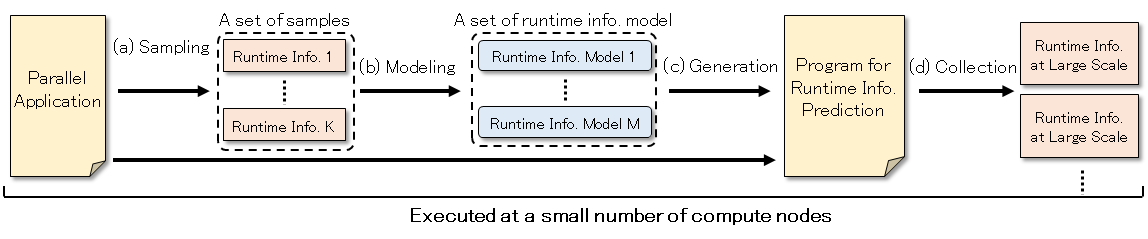

Future supercomputers are required to deliver exascale computing power within a power constraint of 30 MW. To this end, we need to improve the power efficiency of various hardware components such as CPUs, GPUs, memories, and interconnects. Semiconductor chips such as CPUs and GPUs in the same SKU (Stock Keeping Unit) vary in power consumption because of manufacturing variability, and exploiting this variation can help improve the energy efficiency of supercomputers. We assess the power variation of both CPUs and GPUs in various production systems and develop variation-aware job scheduling systems. This study is supported by the KDDI Foundation and conducted in collaboration with Tokyo Institute of Technology.
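As a rough illustration of what variation-aware scheduling means, the sketch below greedily assigns a job to the free nodes that drew the least power under the same workload. It is a simplified heuristic and not the scheduler developed in this project; the node names and power figures are hypothetical.

```python
def pick_nodes(node_power_watts, free_nodes, count):
    """Return the `count` free nodes with the lowest measured power.

    node_power_watts maps a node name to the power it drew while running
    an identical benchmark, so differences reflect chip-to-chip variation.
    Ties are broken by node name to keep the result deterministic.
    """
    candidates = sorted(free_nodes, key=lambda n: (node_power_watts[n], n))
    if len(candidates) < count:
        raise ValueError("not enough free nodes for this job")
    return candidates[:count]

# Hypothetical per-node power (watts) for nominally identical nodes.
node_power_watts = {"n0": 212.0, "n1": 198.5, "n2": 205.0, "n3": 221.3}
free_nodes = ["n0", "n1", "n3"]  # n2 is busy

chosen = pick_nodes(node_power_watts, free_nodes, count=2)
total_power = sum(node_power_watts[n] for n in chosen)
print(chosen, total_power)
```

A production scheduler would also have to weigh performance variation, topology, and fairness; the sketch only shows how measured per-chip power can enter the placement decision.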

Selected Publications

- K. Yoshida, R. Sageyama, S. Miwa, H. Yamaki, and H. Honda, Analyzing Performance and Power-Efficiency Variations among NVIDIA GPUs, The 51st International Conference on Parallel Processing (ICPP) (to appear) (acceptance rate: 84/311=27%).

Processor Architecture with Next-Generation Semiconductor Devices

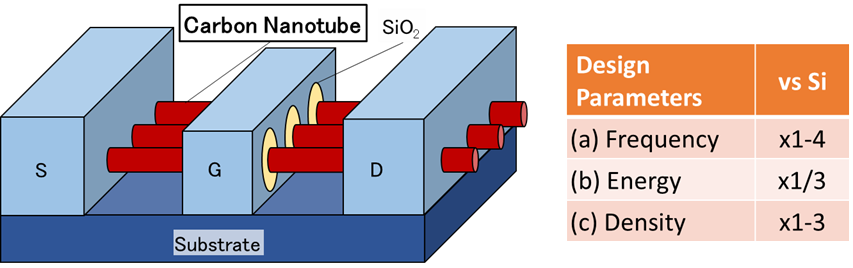

Modern processors are manufactured with silicon transistors and improve their performance as silicon transistors scale down; however, silicon transistor scaling is approaching its limit. Carbon nanotube transistors, which use nanometer-scale carbon nanotubes as transistor channels, are considered a promising alternative to silicon transistors. We therefore develop various design tools and architectures for processors manufactured with carbon nanotube transistors. We collaborate on this project with the University of Tokyo.

Selected Publications

- C. Shi, S. Miwa, T. Yang, R. Shioya, H. Yamaki, and H. Honda, CNFET7: An Open Source Cell Library for 7-nm CNFET Technology, The 28th Asia and South Pacific Design Automation Conference (ASP-DAC) (to appear) (acceptance rate: 102/328=31%).

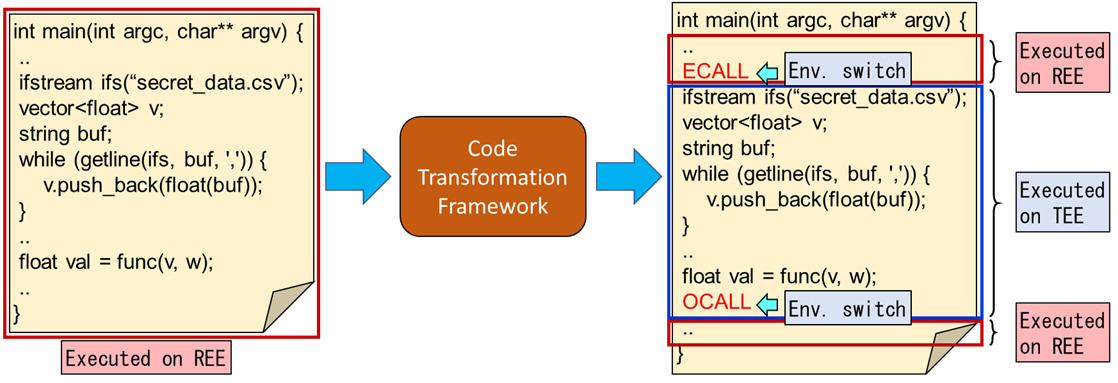

A Framework for Secure and High-Performance Computing Environment

Secure and high-performance computing systems are required as an ICT infrastructure for Society 5.0. TEEs (Trusted Execution Environments) have been developed as highly secure computing environments, but execution on TEEs is slower than on conventional environments, called REEs (Rich Execution Environments). For secure and high-performance computing, all secret operations within an application need to be executed in a TEE, while the others need to be executed in an REE. To achieve this, we develop a code transformation framework that automatically extracts the operations that must run in TEEs from parallel applications written for REEs and generates code that runs on REEs and TEEs concurrently. This study is supported by JST (Japan Science and Technology Agency) PRESTO.
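The partitioning step can be pictured with a small sketch. It does not reproduce the framework described here and does not execute anything inside a real TEE: it assumes, for illustration only, that secret operations are marked with a hypothetical `secret` decorator, and it merely sorts functions into a TEE group and an REE group.

```python
def secret(func):
    """Hypothetical marker: tag a function as handling secret data."""
    func.needs_tee = True
    return func

@secret
def decrypt_record(blob):
    # Toy stand-in for a secret operation (XOR with a fixed byte).
    return bytes(b ^ 0x5A for b in blob)

def parse_header(blob):
    # Non-secret operation that can stay in the faster REE.
    return blob[:4]

@secret
def check_password(candidate, stored):
    return candidate == stored

def partition(functions):
    """Split functions into names destined for the TEE and for the REE."""
    tee, ree = [], []
    for func in functions:
        target = tee if getattr(func, "needs_tee", False) else ree
        target.append(func.__name__)
    return tee, ree

tee_ops, ree_ops = partition([decrypt_record, parse_header, check_password])
print("TEE:", tee_ops)
print("REE:", ree_ops)
```

Here the programmer marks the secret operations by hand; the appeal of an automatic transformation is that this identification and the generation of the two cooperating programs no longer have to be done manually.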